Digital Optics – Silicon-Based Heterogeneous Integration Opens The Gate for Digital Optics and Innovation

Author(s)

Wan-Jiun Liao

Biography

Prof. Liao is a Distinguished Professor of Electrical Engineering at National Taiwan University (NTU), Taipei, Taiwan. Her research interests include 5G/6G, AI for networking, wireless VR/AR, blockchain, and IoT. She is a Fellow of the IEEE.

Academy/University/Organization

National Taiwan University

Source

IEEE ICCE-TW 2020 Special Session on AR/VR Technology for B5G-

You are free to share this article under the Attribution 4.0 International license

- ENGINEERING & TECHNOLOGIES

- Text & Image

- November 23, 2020

The efficiency of image rendering is an important factor in the immersive experience of wireless virtual reality (VR). However, performing image rendering on wireless VR headsets is inefficient because of their limited computing capability and battery life. 5G Mobile Edge Computing (MEC) could enable an immersive experience for wireless VR players because it offers high computational capability in close proximity to them for such real-time, computationally intensive tasks. In this work, we developed a smart VR edge over NTU’s 5G MEC testbed and studied the performance of edge rendering compared with traditional local rendering. We implemented a multiplayer VR dancing game system in which wireless VR players can interact with one another in a virtual space through a complicated 3D game model. The results demonstrate that edge rendering provides a much better quality of user experience in the game.

Virtual reality (VR) allows wireless VR players to interact immersively with one another or with virtual objects in virtual environments. The efficiency of 3D image rendering affects the quality of experience (QoE) for wireless VR players. However, it is inefficient to run 3D image rendering tasks on wireless VR headsets (i.e., local rendering), since they are typically limited in computing resources and battery life. Multi-access Edge Computing (MEC) in 5G was introduced to bring computing capability and storage from the remote cloud to the wireless edge, close to the user equipment (UE). With MEC, VR image rendering can run on the MEC server (i.e., edge rendering), and the rendering result is then streamed to the wireless VR headsets over wireless connections. With edge rendering, however, the QoE of wireless VR players also depends on the quality of the wireless connection. The combination of 5G/B5G and MEC is therefore promising for wireless VR streaming, thanks to the ultra-reliable low-latency communications (URLLC) and enhanced Mobile Broadband (eMBB) characteristics of 5G/B5G.

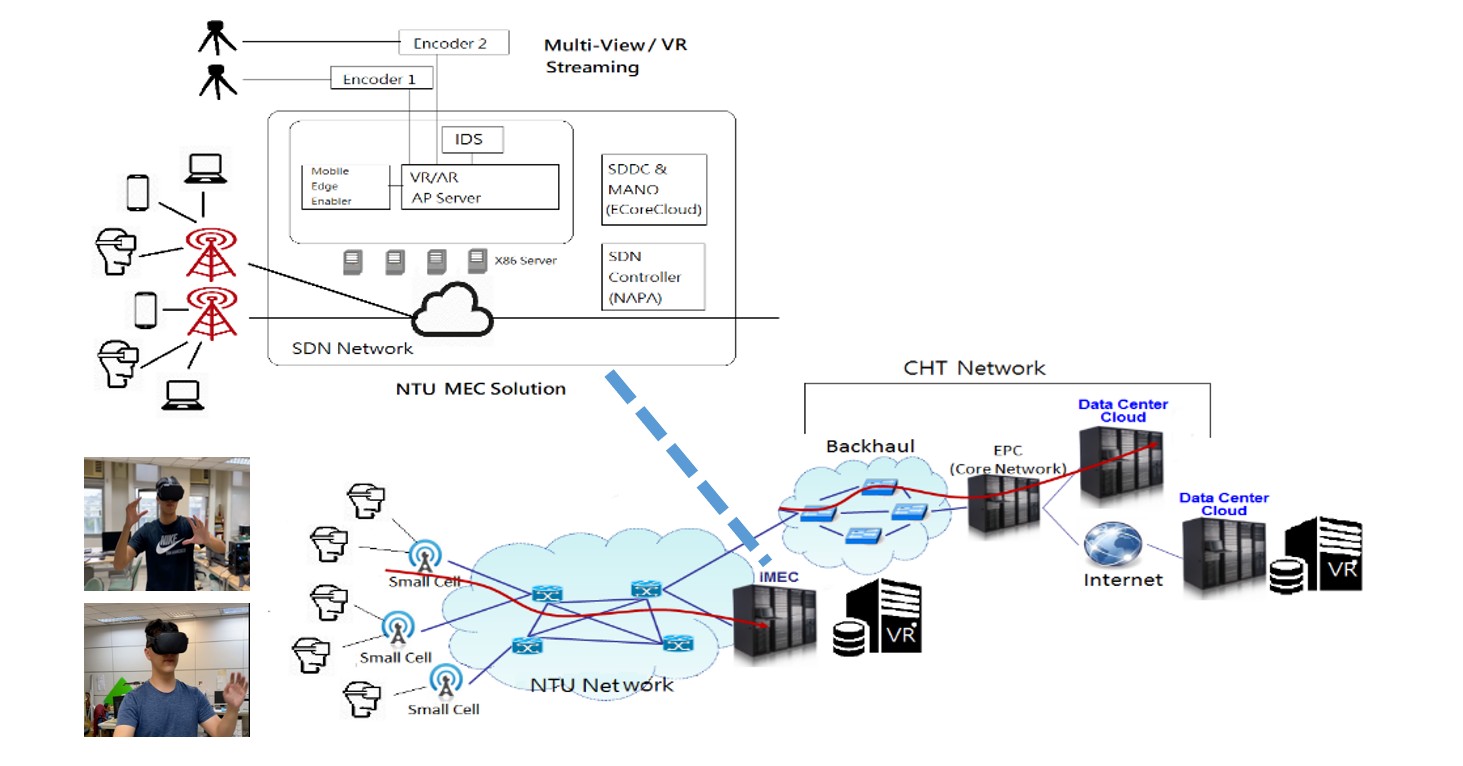

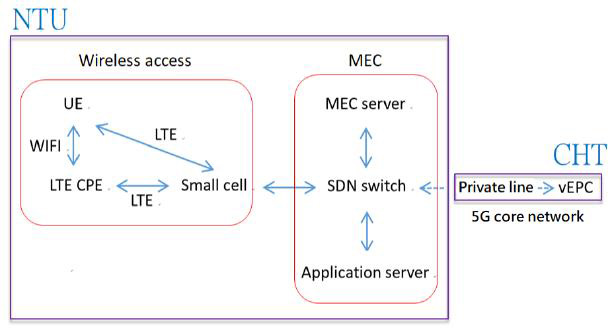

Figure 1. NTU 5G MEC System

In this work, we share our experience in developing a smart edge for wireless VR streaming at NTU, which enables edge rendering via 5G MEC. The NTU 5G MEC platform consists of three parts: wireless access, MEC, and a 5G core network. The wireless access and MEC parts are both built within NTU, while the 5G core network is managed by Chunghwa Telecom (CHT), Taiwan, as shown in Fig. 1. In the wireless access part, the UE can access the small cell directly or indirectly via the LTE CPE. The small cell forwards each packet sent from the UE to the SDN switch located within the MEC. Once the SDN switch receives a packet from the UE, the switch forwards it to the MEC server. The MEC server then checks the packet and decides whether it should be forwarded to the application server (built by NTU) or to the vEPC (located at CHT) via the private line. The application server can provide any type of service. In this work, the focus is on the multi-user wireless VR game service.
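The forwarding decision made by the MEC server can be illustrated with a minimal sketch. The article does not state which packet fields the MEC server inspects, so the sketch assumes, purely for illustration, that the decision is based on the destination IP address. The names `LOCAL_APP_NETWORK`, `Packet`, and `next_hop`, as well as the address range, are hypothetical and not part of the NTU system.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Hypothetical address range of the NTU-built application server(s).
# The real testbed's addressing is not given in the article.
LOCAL_APP_NETWORK = ip_network("10.0.0.0/24")


@dataclass(frozen=True)
class Packet:
    """Only the fields this sketch needs."""
    src_ip: str
    dst_ip: str


def next_hop(packet: Packet) -> str:
    """Return where the MEC server would forward the packet.

    Assumption: traffic destined for the local application server stays
    at the edge; everything else goes to the vEPC over the private line.
    """
    if ip_address(packet.dst_ip) in LOCAL_APP_NETWORK:
        return "application-server"
    return "vEPC"


if __name__ == "__main__":
    vr_packet = Packet(src_ip="192.168.1.5", dst_ip="10.0.0.7")
    other_packet = Packet(src_ip="192.168.1.5", dst_ip="8.8.8.8")
    print(next_hop(vr_packet))
    print(next_hop(other_packet))
```

Keeping VR game traffic on the local branch is what lets rendering happen close to the players; only traffic that is not served at the edge needs to cross the private line to the core network.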

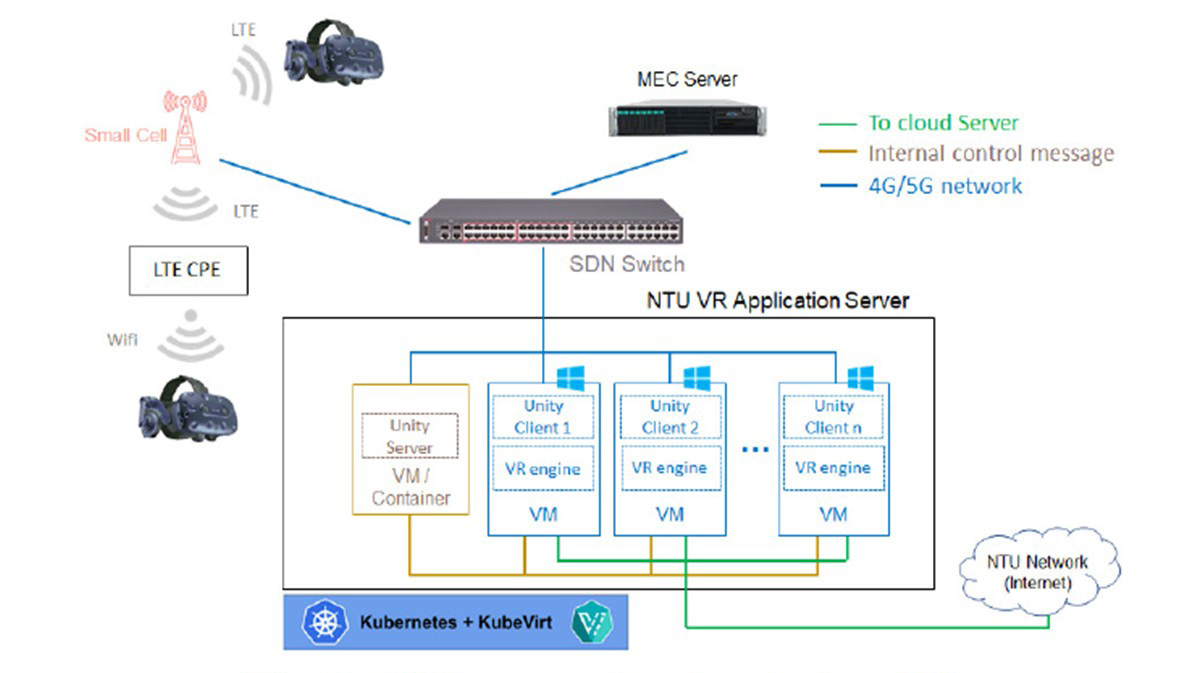

For NTU’s smart edge for wireless VR streaming, as shown in Fig. 2, the virtualization environment of the application server is built with Kubernetes and KubeVirt so that we can allocate any number of virtual machines (VMs) and containers according to the application's needs. Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications, and KubeVirt is a Kubernetes extension that allows traditional VM workloads to run natively side by side with container workloads. We have also developed a multi-player VR game based on Unity, a real-time 3D development platform. The Unity server is installed in one VM, and a Unity client and its associated VR engine are installed in another. The VR engine is implemented with ALVR, which performs the 3D image rendering and then streams the content of the VR game to an Oculus Quest headset. Note that if there are two wireless VR players, we need two such VMs, each containing a Unity client and a VR engine, so that each wireless VR player is served separately.

Figure 2. NTU smart edge for wireless VR services
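The VM layout described for Fig. 2 (one VM for the Unity server, plus one VM per player holding a Unity client and a VR engine) can be sketched as a small planning function. This is only an illustration of the counting rule, assuming that a single Unity server VM is shared by all players; `VmSpec` and `plan_vms` are hypothetical names and do not correspond to any Kubernetes or KubeVirt API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class VmSpec:
    """A VM and the software components installed in it (illustrative only)."""
    name: str
    components: Tuple[str, ...]


def plan_vms(num_players: int) -> List[VmSpec]:
    """List the VMs needed to serve the given number of wireless VR players.

    Assumption: one shared Unity server VM, plus one VM per player that
    contains a Unity client and its VR engine (ALVR in the article).
    """
    if num_players < 1:
        raise ValueError("at least one player is required")
    vms = [VmSpec(name="unity-server", components=("unity-server",))]
    for i in range(1, num_players + 1):
        vms.append(
            VmSpec(name=f"player-{i}", components=("unity-client", "vr-engine"))
        )
    return vms


if __name__ == "__main__":
    for vm in plan_vms(2):
        print(vm.name, vm.components)
```

Under these assumptions, the two-player dancing game needs three VMs in total. In the actual testbed each `VmSpec` would correspond to a VM scheduled through KubeVirt, which the sketch does not attempt to model.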

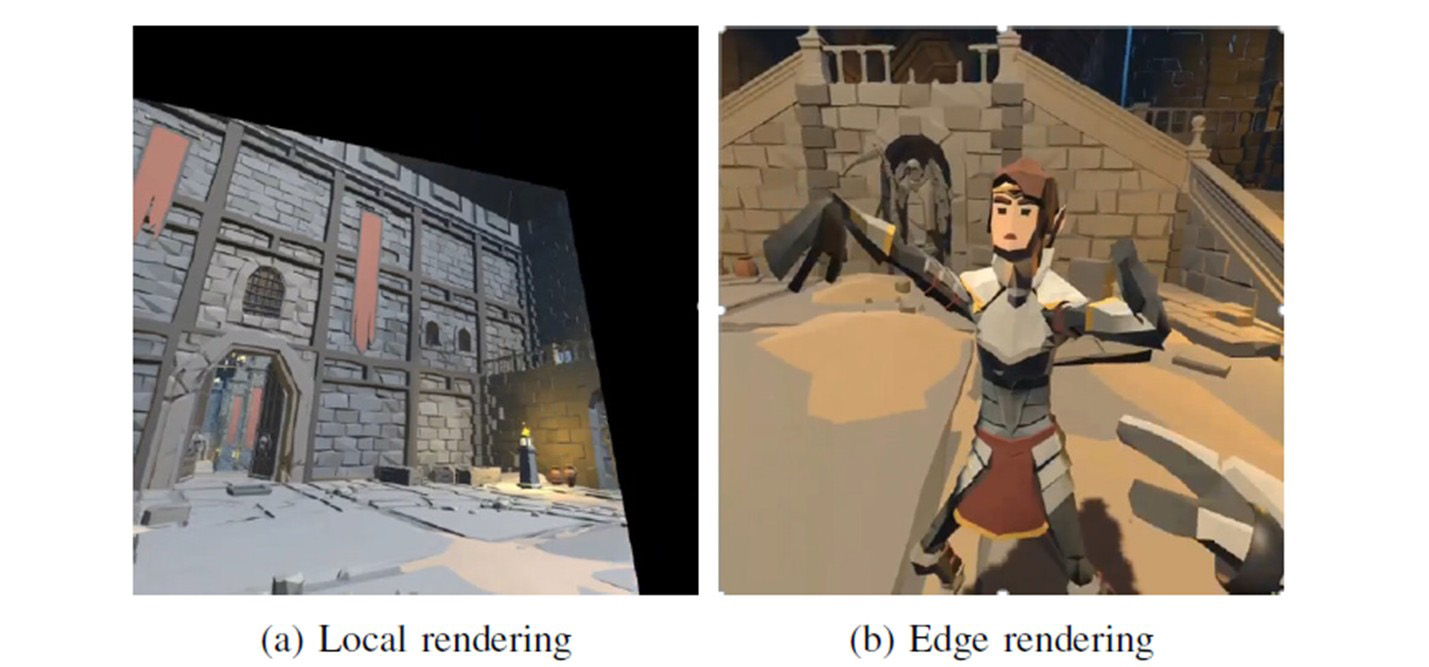

We show the result of two wireless VR players dancing together in the same physical space and interacting with each other in the same virtual space. Fig. 3a and Fig. 3b show the field of view (FoV) generated by local rendering and edge rendering, respectively. With local rendering, part of the FoV turns into a black screen when the wireless VR player quickly turns his/her head, because the headset has limited computing power. With edge rendering, the view remains very smooth as the wireless VR player turns his/her head.

Figure 3. Performance comparison of different approaches for wireless VR

In this work, we demonstrate a simple multi-player VR dancing game scenario in which two wireless VR players interact with each other in the virtual space over NTU’s 5G smart edge for VR streaming. We compare the FoV generated by local rendering and edge rendering when the players quickly turn their heads. The results show that edge rendering can achieve much better performance and save more of the wireless VR headset’s energy than local rendering. This is only the first step toward a VR smart edge in NTU’s 5G MEC testbed. We are currently developing more advanced features for wireless VR, including virtual resource allocation at the smart edge, proactive prediction and caching for VR frames, viewing synchronization for clients at different locations, object sharing in transmissions, and cybersickness reduction for wireless VR players. We will report our results in the future.
