A Great Opportunity for Digital Transformation in Traditional Manufacturing Companies

Author(s)

Albert Liu & Bike Xie

Biography

Albert Liu holds a PhD in electrical engineering (EE) from UCLA. He held R&D and managerial positions at Qualcomm and Samsung Electronics before becoming the founder and CEO of Kneron. Albert holds more than 30 patents and has published more than 70 papers in major international journals.

Bike Xie is VP of Engineering at Kneron, focusing on system architecture design for AI chips, deep learning model compression, and algorithm design for deep learning applications. He received his PhD from UCLA.

Academy/University/Organization

Kneron


You are free to share this article under the Creative Commons Attribution 4.0 International (CC BY 4.0) license.

- ENGINEERING & TECHNOLOGIES

- August 17, 2021

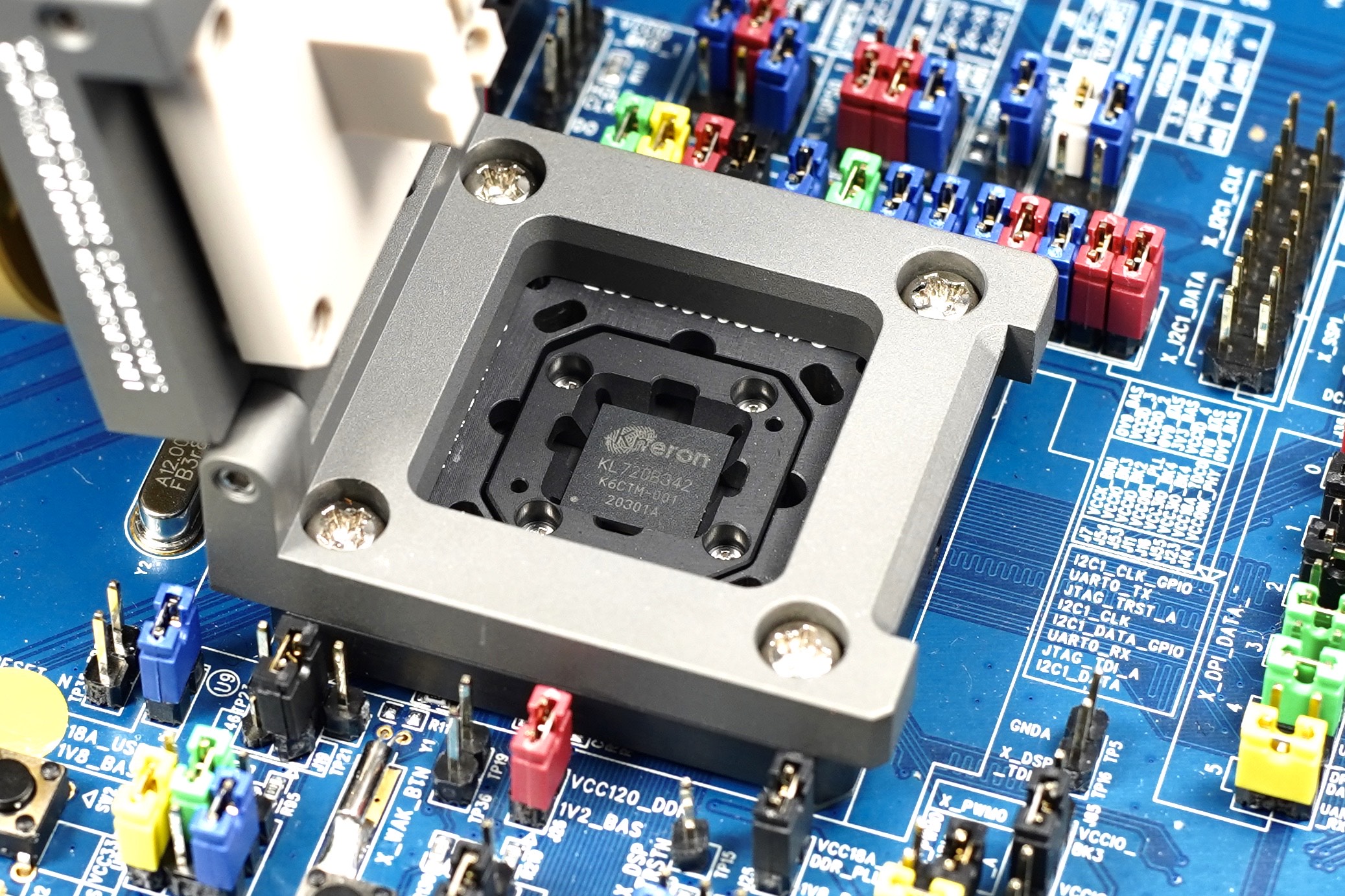

The ability to deploy AI on the edge is key to realizing many promising applications. Reducing AI model size without sacrificing computing performance is one of the cruxes of this ability. Through quantization, we reduce the size of each calculation (multiply-accumulate operations) by almost 50% with an accuracy loss of less than 1%.

AI hardware (the chip) is a machine that performs computations, much as the human brain allows us to think. AI software (the model) encodes the computational process needed to derive conclusions. Finally, when visual, audio, or numeric observations collected by sensors (the data) are fed into the brain (the chip) that houses the necessary thought process (the model), the apparatus derives patterns and judgements from those observations. In a sense, this AI apparatus may be compared to a child. Given a functioning brain, an excellent education in logic and reasoning within a specific field, and exposure to vast amounts of relevant facts, the child can arrive at accurate or insightful conclusions about the phenomena those facts describe.

Since the 2016 renaissance of AI, the technology has promised marvelous applications that could lead to a safer, more convenient, and more prosperous society. The autonomous vehicle is one salient example. And yet fully autonomous vehicles have been long in the making. Indeed, experts expect L0 (conventional), L1 (driver assistance), and L2 (partial driving automation) vehicles to make up the vast majority of vehicles on the market beyond the end of this decade. The higher levels of autonomous driving, namely L3 (conditional driving automation), L4 (high driving automation), and L5 (full driving automation), involve a mélange of complex technical considerations.

Among these considerations are three major concerns associated with the current practice of deploying AI in the cloud: cost, latency, and security. When AI algorithms are housed in the cloud, vehicle sensors must send the data they collect to the cloud in order to retrieve inferences. Bandwidth is costly, which makes the technology difficult to integrate. Data transfer creates latency, which could be dangerous in driving incidents where critical responses must be made in less than a second. Moreover, cloud connectivity exposes vehicles to potential data privacy breaches and hacking. Autonomous driving is one of many examples where AI algorithms are better housed on the edge (on the device) than in a remote cloud location away from the site of data collection. Consequently, methods for shrinking AI models to fit on small, lightweight hardware, without sacrificing computing performance, are key to the ubiquitous deployment of AI.
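
To put the latency concern in numbers, the short calculation below estimates how far a vehicle travels while it waits for an inference result. It relies only on distance = speed × time; the 100 km/h speed and the delay values are hypothetical illustration figures, not measurements reported in this article.

```python
# Distance a vehicle covers while waiting for an inference result.
# The speed and delay values used below are hypothetical illustration figures.

def distance_during_delay_m(speed_kmh: float, delay_ms: float) -> float:
    """Return the metres travelled at speed_kmh during delay_ms milliseconds."""
    speed_m_per_s = speed_kmh / 3.6          # convert km/h to m/s
    return speed_m_per_s * (delay_ms / 1000.0)


if __name__ == "__main__":
    for delay_ms in (10, 100, 500):          # hypothetical end-to-end delays
        d = distance_during_delay_m(100.0, delay_ms)
        print(f"100 km/h, {delay_ms:>3} ms delay -> {d:.2f} m travelled")
```

At 100 km/h, every 100 ms of added delay corresponds to roughly 2.8 m of travel before the system can even begin to react, which is why shaving round trips to a remote server matters for safety-critical responses.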

Figure 1. In order to enable deployment in sensors and small devices, edge AI chips are required to be highly lightweight and energy-efficient.

Here we focus on making AI models more lightweight, and we explored two methods for downsizing them: pruning and quantization. The size of an AI model equals the number of weights (the parameters built into a model that determine how incoming data, or signals, are processed) multiplied by the number of bits used to represent each weight (the bitwidth).
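
The size relation above (number of weights multiplied by bitwidth) can be checked with a few lines of arithmetic. The 25-million-weight count below is a hypothetical round number chosen for illustration, not a model discussed in this article, and the calculation covers weight storage only.

```python
# Model size = number of weights x bitwidth (bits per weight).
# The weight count in the example is a hypothetical round number.

def model_size_bytes(num_weights: int, bitwidth: int) -> float:
    """Storage needed for the weights alone, in bytes (8 bits per byte)."""
    return num_weights * bitwidth / 8


if __name__ == "__main__":
    n = 25_000_000  # hypothetical weight count
    for label, bits in (("FP32", 32), ("INT8", 8), ("INT4", 4)):
        print(f"{label}: {model_size_bytes(n, bits) / 1e6:.1f} MB")
    # Pruning half the weights halves n, which halves the size again.
    print(f"INT8, 50% pruned: {model_size_bytes(n // 2, 8) / 1e6:.1f} MB")
```

This prints 100.0 MB for FP32, 25.0 MB for INT8, 12.5 MB for INT4, and 12.5 MB for a half-pruned INT8 model. Both levers in the formula act multiplicatively; in practice, unstructured pruning also needs some way to record which weights remain (for example a sparse index), so its real savings are somewhat smaller than this idealized count.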

- Pruning: Through pruning, we have been able to reduce the number of weights by 50 percent for most deep learning models, thus reducing model size.

- Quantization: Whereas it has been common practice to convert standard 32-bit floating-point models for object classification and detection into 8-bit integer (INT8) models, we have further been able to halve the bitwidth of these models through a mixed INT4-INT8 quantization solution. This reduces the size of each calculation (multiply-accumulate operations) by almost 50% with an accuracy loss of less than 1%.
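
The pruning and quantization steps described in the list above can be illustrated with a small, dependency-free sketch. It uses textbook magnitude pruning and symmetric per-tensor linear quantization; these are generic techniques swapped in for illustration, not the authors' production pipeline. The per-layer rule that picks INT4 or INT8 (`choose_bitwidth`, with its `max_error` threshold) is a hypothetical heuristic standing in for whatever selection criterion a real mixed-precision solution uses.

```python
# Minimal sketch: magnitude pruning plus symmetric per-tensor linear
# quantization, with a toy per-layer INT4/INT8 choice. Generic techniques for
# illustration only; choose_bitwidth and max_error are hypothetical.

def magnitude_prune(weights, sparsity=0.5):
    """Set the smallest-magnitude `sparsity` fraction of weights to zero."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_symmetric(weights, bits):
    """Map floats to signed integers in [-qmax, qmax] using a single scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from integer codes and the scale."""
    return [v * scale for v in q]


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def choose_bitwidth(weights, max_error=0.01):
    """Hypothetical rule: use INT4 if its mean error is small enough, else INT8."""
    q4, s4 = quantize_symmetric(weights, 4)
    if mean_abs_error(weights, dequantize(q4, s4)) <= max_error:
        return 4
    return 8


if __name__ == "__main__":
    layer = [0.8, -0.05, 0.3, -0.6, 0.01, 0.45, -0.2, 0.02]  # toy weights
    pruned = magnitude_prune(layer, sparsity=0.5)
    bits = choose_bitwidth(pruned)
    q, scale = quantize_symmetric(pruned, bits)
    print("pruned:", pruned)
    print(f"INT{bits} codes:", q, "scale:", round(scale, 4))
```

Layers whose weights survive 4-bit rounding with little error drop to INT4, while more sensitive layers stay at INT8; averaged over a network, this is how a mixed scheme can approach half the bitwidth of a pure INT8 model. Unstructured pruning of this kind only saves storage and computation if the zeros are exploited, for example through sparse encoding or hardware that skips zero weights.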

As model sizes are halved, so is the power (in watts) required to run the AI model. This allows AI models to be deployed on edge devices and sensors that were previously out of reach because of energy constraints, across a myriad of applications in autonomous driving, smart infrastructure, and smart homes. This migration of AI from the centralized cloud to the decentralized edge opens up avenues for more cost-effective, swift, and secure applications.

Nonetheless, much work remains to be done by industry and the academic community to reduce AI model sizes. There is ample academic research on model compression and quantization. However, most of it focuses solely on the convolution layers of convolutional neural networks (CNNs) and largely overlooks non-convolution layers. Furthermore, much of this research concerns basic tasks such as classification and detection, so it offers limited help to industry in developing real-life products. This is partly because researchers cannot see the full set of difficulties that non-convolution layers present in real products, particularly hardware limitations: each chip is unique, and researchers cannot access every one of them. The burden of investigating non-convolution layers has therefore fallen to individual companies.

A re-evaluation of synergies between industry and academia could greatly benefit the deployment of artificial intelligence on the edge. By enabling the ubiquitous proliferation of AI in small, energy-constrained sensors and devices, we allow data collectors and processors in vehicles, roadside units, and other traffic-related equipment to collaborate more promptly and effectively, ushering in the age of safe, fully autonomous driving.
